What experiences can make someone more wise? Can objects hold solutions for problems we don’t know we have?


As a cognitive neuro-psychologist at the University of Chicago, Dr. Shannon Heald focuses on understanding how our experiences shape and guide how we perceive the world, how we communicate with one another, and how we think and behave. John Veillette is a doctoral student and National Science Foundation graduate fellow in the Integrative Neuroscience Program at the University of Chicago working with Center for Practical Wisdom founder Dr. Howard C. Nusbaum.

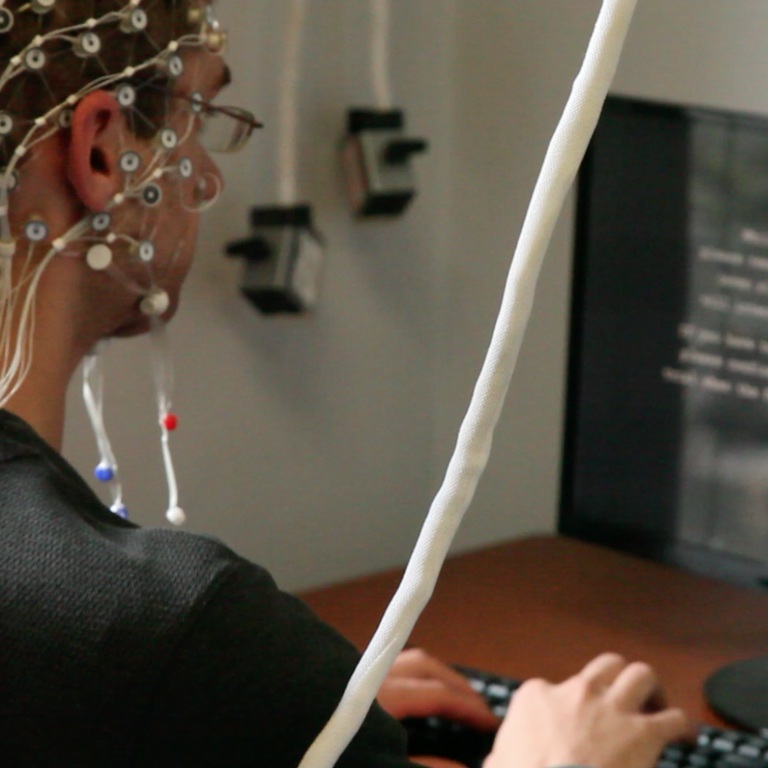

As researchers in the Attention, Perception, and Experience Lab, they draw on methods from multiple disciplines, including behavioral research, linguistic analysis, electrophysiology/electroencephalography (EEG), and fMRI, and they specialize in systems-level neuroscience methodology and neuro-forecasting.

In this episode of Conversations on Wisdom, we discuss their current work on creativity, mathematical models of the brain, and what we might see in terms of brain states associated with wisdom, as well as artificial wisdom.

Listen to the podcast on our SoundCloud Page!

Read articles and publications on Artificial Wisdom and Artificial Intelligence below:

- Catlett, C., Beckman, P., Ferrier, N., Nusbaum, H., Papka, M. E., Berman, M. G., & Sankaran, R. (2020). Measuring Cities with Software-Defined Sensors. Journal of Social Computing, 1(1), 14-27.

- Clegg, S., Berti, M., Simpson, A. V., & Cunha, M. P. E. (2020). Artificial Intelligence and the Future of Practical Wisdom in Business Management. Handbook of Practical Wisdom in Business and Management, 1-18.

- Jeste, D. V., Graham, S. A., Nguyen, T. T., Depp, C. A., Lee, E. E., & Kim, H. C. (2020). Beyond artificial intelligence: Exploring artificial wisdom. International Psychogeriatrics, 32(8), 993-1001. doi:10.1017/S1041610220000927

- Lee, E. E., Torous, J., De Choudhury, M., Depp, C. A., Graham, S. A., Kim, H. C., ... & Jeste, D. V. (2021). Artificial Intelligence for Mental Healthcare: Clinical Applications, Barriers, Facilitators, and Artificial Wisdom. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging.

- Nusbaum, H. C. (2020). How to make Artificial Wisdom possible. International Psychogeriatrics, 32(8), 909-911.

- Trafton, A. (2020, October 2). How we make moral decisions: In some situations, asking “what if everyone did that?” is a common strategy for judging whether an action is right or wrong. MIT News Office. Retrieved from https://news.mit.edu/2020/moral-decisions-universalization-1002

- UCSD Health Sciences. (2020, July 9). Artificial wisdom: Beyond intelligence. Retrieved from https://ucsdhealthsciences.tumblr.com/post/623203901021323264/artificial-wisdom-beyond-intelligence-from

Join the conversation on wise brain states and Artificial Wisdom on our social media: Facebook, Twitter, and LinkedIn

Read the transcript of our conversation below:

Dr. Shannon Heald: I happen to believe that wisdom, if one has it, comes by proxy of some experiences you've had in your life. Instead of just saying, ‘Let's find wise people,’ I'm interested in what experiences I can give someone to make them wiser. I have chosen to think about creativity more than wisdom per se, because I think it's a much more tractable problem. But I think this conversation we're having is interesting because it's highlighting for John and me that a lot of our methods and approaches could be applied to questions about wisdom and about using computers to make people wise. I was just looking at the title of the page we wrote together. I didn't name it, John did, and I think the title is telling: I'm not wise, but maybe my brain is?

Jean Matelski Boulware: Over the past few weeks, I've had some time to think about the changing world we are currently living in, and to ponder what things will look like once we really begin to open up again. In this, I've been wondering about the role that wisdom and experience play, and how our local and world leaders could make the wisest decisions. A few recent articles on the concept of artificial wisdom got me thinking about what that looks like.

I turned to Dr. Shannon Heald and doctoral candidate John Veillette from the University of Chicago’s Attention, Perception, & EXperience lab for a fun yet informed discussion to try to wrap my brain around artificial wisdom and the role of computers and machine learning in helping people make wiser decisions. From the University of Chicago Center for Practical Wisdom, I'm Jean Matelski Boulware, and this is a podcast of Conversations on Wisdom.

Shannon: I'm a professional researcher in the psychology department. I'm a cognitive psychologist by trade. I think very broadly speaking, my research interests are about the role that experience plays in informing how we see the world, how we communicate with others, and how we make decisions and solve problems. And so I approach this from problem-solving most often in my work and thinking about what makes someone a better problem solver. And certainly, when we think about someone being wise, we think of them as being this wonderful problem solver.

Yoda: "The greatest teacher, failure is."

Shannon: And so I approach this from an interest in problem-solving and perception, and in the role that experience plays in sort of granting those things as skills. I definitely fall very hard under the umbrella that wisdom could be thought of as a skill, a skill of perception, that's embodied by some sort of experiences that we've had in our life.

John Veillette: My research is a little hard to put under one umbrella, as Shannon's is as well. I guess I'd describe it as how we maintain and update internal models of the world, and how those models change the way we interact with and experience the environment. My research ends up focusing more on things like motor control and perception, kind of low-level processes, but I'm also interested in making models of higher-level cognitive processes.

Shannon: Because of his interest in models, he's allowing us to answer the question of what role statistics or machine learning can play in understanding wisdom, or any of these topics we might be talking about. Not to put words in your mouth, John, but that's the fun part about working with you: we sort of broaden our research program in that way.

Jean: While many may not connect the process of perception with problem-solving, Shannon explains to us why the process of perception is central when thinking about problem-solving and creativity.

Shannon: When we think about creativity, it's in the context of problem-solving. You have a problem and then I give you a solution and you're like, "That's creative." When we think about solution finding, we definitely think it's in relationship to a problem. We think we need to have this problem in order to solve things.

Oftentimes, when we're looking around the world, we can see objects around us and we can think about their potential uses in trying to understand them. So for example, if I walk into the kitchen, and I don't have a goal, I might look at the world around me and go, "Oh, there are many action possibilities for me," I can be like, "Look, there's a cookie sheet, I can go make cookies. Look, there's my computer, I can go and write a paper." And I can use my environment and the objects around me to dictate what it is that I could do…and if you start thinking about the world as inspiration, as potential solutions, you start to see that perceptually understanding the world is really important when solving a problem.

A good way to think about this is when I have a problem or goal, and it's to hammer something, I look around and everything turns into a hammer. And that's pretty creative, right? And so this idea that the recognition of objects is tied to the functional use of those objects sort of has this nice, illuminating point…and that is, we can start to think of objects as holding solutions to problems we might not know we have. And that frees solution finding from being subject to a goal or a problem and it puts it at a more prime level of just perception itself.

Jean: Now, the concept of functional fixedness came up multiple times in our conversation. It's the inability to recognize that an object can perform functions other than its original use. Shannon explains its role in creativity with a few examples. She also talks a little bit about open monitoring, which involves being aware of feelings, thoughts, and sensations, both internal and external. I like to think of it as being hyper-aware to decrease mind wandering.

Shannon: I think there are some people who display more creativity around their uses of objects, where they're not tied to that canonical use of, like, what are bricks for. Others are like, "Oh, I'm not a bricklayer, and I'm not building houses today, so I have no use for the bricks," right? [laughs] But you know, obviously, there are some people who, when they look around the world, recognize objects as holding a solution for them. So they'll go, "Oh, I need to make a pendulum today. I guess I could use a hammer for that," even though hammers are used for nailing things.

This sort of ties to the idea of serendipity. We have this notion of serendipity in the research community; we think of it as chance discoveries. So antibiotics were a serendipitous finding. Velcro is another one. As a society, we're like, "Okay, well, since it's chance, there's no way for us to engineer their occurrence other than to just do our thing, and then they're just going to happen."

But of course, we have Louis Pasteur, who said, "Chance favors the prepared mind." And I think that gives us license to start thinking of this perceptual ability to recognize solutions to problems we didn't know we had as that prepared mind: a form of creativity, a form of insight that's different from our traditional sense of insight, one that allows us to go beyond any one goal we may have and to actually see new goals we can adopt based on coming into contact with an object.

A good example of this is Velcro. George de Mestral, the person who discovered Velcro, was walking around one day, looking at his environment, and was like, "Hey, I noticed a burr stuck to my pants." And when he was looking at the burr, you know, any one of us could have just said, "Hey, it's a burr, I'm going to throw it off my pants, it's not that exciting." But he was sort of captivated by it as an object. He put it under a microscope. He took a look and he goes, "Well, this is really a great way to fasten things." And he wasn't looking to invent a better way to fasten things. What I would argue is that he had a prepared mind to recognize that object, or a feature of that object, as a solution to a problem he didn't know he had.

Jean: Do you think that people who tend to make wiser decisions are more aware of doing this, or do it more frequently?

Shannon: So, to the degree that open monitoring is tied to a wise practice, and that this practice might open the door to more solution-finding absent a goal, this state of being aware of possibilities, I think, fits really well with the creativity literature. And for people who meditate, which we know is tied to wisdom, [chuckles] if they do this type of meditation, I do think that open monitoring might be particularly helpful for encouraging a natural disposition toward finding these need-solution pairs. Is it wise? I don't know. You know, I think that's an empirical question.

Jean: As our discussion continued, I was curious as to John's role in this research on brain states and wisdom, and the mathematical models of the brain that might represent this.

John: It'll be a bit of a tangent away from creative problem solving for a second, but then we'll loop back because it connects. On the topic of classifying states from people's brains, there is a recent history of papers that are basically just trying to predict what decisions people will make or what types of decisions they make while they look at certain stimuli. So if I could see your brain while you were shopping on Amazon, could I predict what products you’d buy? Brian Knutson's group at Stanford thought, ‘Well, what if instead of just predicting what products this individual shopper was going to buy, we could also predict what products are going to succeed in the market?’ Can I predict what you're going to buy from my brain?

We went back and we said, "Okay, we have these sort of ideas about the role of early perception, or our understanding of objects being rooted in perception, and about how we value objects being related to what we can use them for." We thought it might be useful to go back, in the same sort of paradigm in which people are given money and are making decisions, and [look] not just at where the activity that scales to predict crowd outcomes is, but also at when it happens relative to when you see the stimulus.

And as it happens, we see that really early on, around 160 to 200 ms after a stimulus is presented to you (which a good bit of research suggests is even before you're consciously aware of the stimulus), we can already predict above chance whether the project is going to be funded. So it's not as if this information doesn't exist in the person; it's just that they don't have conscious access to it. And when we think about insight and creative problem solving, these things that result in an ‘aha moment’, as cognitive psychologists we like to think not about the phenomenological ‘I'm having an aha moment’ experience, but about the process that actually leads up to it.

And so predicting not an individual's behavior from their brain, which is the classic paradigm of neuroimaging studies, but instead the group's behavior, someone else's behavior, from an individual's brain seems like a very compelling way to draw out this ongoing cognitive process that we believe is happening but don't really have a way of querying in real time, because people can't consciously report on it or act on it yet. It doesn't impact behavior until much later.

Shannon: And even beyond neuro-forecasting, the idea here is that, to the degree we can identify what subset of neural activity is predictive of, let's say, group-level behavior or creative outcomes in problem-solving, we could potentially use brain-computer interfacing to help people attend to that activity. We can have a computer basically notify them via a light or a sound [bell sound] that says, "Hey, you're in a great place right now to be creative," or "No, not yet, you're not in that state yet," giving people some external awareness of what the computer is noticing about their brain state. And the idea is that through brain-computer interfacing, we might be able to cultivate individuals into becoming, let's say, super creative solution finders or super forecasters.

First, there's the question: if I turn that feedback off, are you still going to be more insightful once it's off? I think, you know, that's a separate question. And then the second is: let's say you are. If I give you another insight task, is that still going to transfer? As John has pointed out, we have this nice study he's done where we've demonstrated that very early neural activity predicts crowd outcomes. And as such, we're in a nice place to start playing that game of forecasting, in terms of finding objects that are more innovative than other objects.

Jean: As researchers, we have many ways to try to measure and quantify wisdom, everything from self-report methods to wisdom tasks, and a range of theoretical perspectives to support these concepts and instruments. I was curious: could we put someone in a scanner and measure them while they perform a task like Grossmann’s SWIS, the Situated WIse Reasoning Scale, to see what a wise brain state might look like?

Shannon: Certainly, you could have people making wise decisions versus non-wise decisions, whatever that might mean, which, behaviorally, has of course consumed research efforts for the latter part of this decade. [all laugh] And so sure! If you think that SWIS is the answer to that question, and we know the opposite of SWIS, whatever that may be, then in theory we could try to classify brains based on whether they're doing SWIS-y things versus non-SWIS things.

If you bifurcate scales based on state versus trait, I think that's going to change what you're looking for in terms of brain state. In some views of creativity, some people are more inclined to have insight than others, and you can see that just by looking at the resting state of their brain, when their brain's not doing anything. Maybe people who believe that wisdom is a trait would want to take more of a resting-state approach. And people who take a more state-based view would want to have you actually in a scanner, doing a specific problem, and then look at what your brain looks like in that state.

And they might even take a component approach to wisdom, right? Where you don't have to be doing all three components of wisdom at once. We might just want to ask, “What does it mean to be doing reflective wisdom more than others?” I think that's a separate question from looking for a person who's doing it all, the wise people in the world, whoever they might be, according to whatever traditions.

Jean: There was a recent article where some researchers did this sort of thing, not with wisdom, but with meditation. I thought it was interesting because of the wide range of meditation types. The researchers successfully trained a computer to identify a meditative brain state.

Shannon: It's interesting that you bring up meditation, because one of the brain states associated with creativity and problem solving is open monitoring. And when we were given your question of what the brain states underlying wisdom would be, one easy candidate is sustained attention versus open monitoring, and one can ask which would be more specific to wisdom. One has to be careful in how one words that question, because it could also be that in wise decision making, the stage of the decision you're in, and its timing, matter. So when you have time you can use for reflection, rather than a decision that needs to be made now, that might call for open monitoring, but the cognitive component of wisdom might rely more on sustained attention.

And so, if we think about wisdom as this monolithic thing, it's hard for me to know how to combine the components, and to think about those components not just as precursors but as scaffolding in time, in some sort of hierarchy, to achieve, let's say, a wise outcome, or what would be judged wise by others.

What does all that mean for wisdom? I think the very simple answer is that we can take an approach to wisdom very similar to the one we've taken with creativity and with neuro-forecasting of market-level outcomes. The idea is that, to the degree that wise decision making, or the components of it we care about, is reflected in stable neural markers of some kind, then in theory we can manipulate those states, or use brain-computer interfacing to track them, in order to promote wise decision making.

Jean: While we haven't seen anything specific yet on brain states associated with wisdom, there have been a few publications in the past year or so on this concept of artificial wisdom [see references above]. And while most of us might agree with Dr. Dilip Jeste and colleagues that the ultimate goal of artificial intelligence is to serve humanity, Dr. Howard Nusbaum points out in his commentary, ‘How to make Artificial Wisdom possible,’ that research on AI does not follow a single overarching goal, such as serving humanity, much less imbuing AI with moral virtues.

Shannon: I have to say, I've thought about this question far more from a creativity standpoint than by tackling the wisdom question: what would it mean for a computer to start looking creative when it offers a solution? For me, wisdom is a step beyond creativity, because, as I think a lot of people have demonstrated, there are multiple components to wisdom, and there could be a temporal aspect to how those components are assembled to produce an outcome that, in some circumstances, [would be] conceived of as wise by many people.

John: When I think about endowing a computer with, like, some rudimentary form of wisdom, I think we're just trying to get the computer to not do anything stupid.

Sophia the Android: Okay. I will destroy humans.

[laughter]

Shannon: Don't jump the gun. Consider three alternatives first. [laughs]

John: Current models are dealing with this a little bit better, but there's still no concrete solution to this problem of uncertainty estimation: how do we get these sophisticated models to estimate their own uncertainty about their predictions? So it can say, "Well, I think it's an airplane. But you know, honestly, I'm not too sure about that." [laughter]… in which case, you might not make any decisions based on that.

When you think about a creative machine, the things that might make the machine unwise, like categorizing things incorrectly, could be construed as the machine being really creative. Say you've trained a machine to identify potential uses from pictures of objects, and it gives you a use that's really unexpected. You might be less inclined to say, "No, that's completely wrong," or to wish it were better able to recognize that it just didn't know. You might say, "Oh, maybe we could use that object for that. I've never thought of that before."

Shannon: Right. You're like, "Wow, that's an innovative machine." [laughs] And really, it's just our tolerance for a different level of uncertainty. And so I think computers might be really useful in giving us alternatives to pursue. That's what I'm interested in: not how we get the next Data to be wise, but how we can leverage computers or machines to tap into subsets of our neural activity that we just aren't conscious of. What training set material can we give a machine so that we become aware of other potential uses for the objects sitting around us that we would never otherwise see? Because we are, to some extent, always functionally fixed. I am going to be somewhat constrained by my experiences. When I was explaining my research interests, I started by asking what experiences might lead someone to become wise. And so we can ask: what is the training set we could give a machine that would allow it to look at a situation and give a wise answer?

Jean: That was Dr. Shannon Heald and doctoral student John Veillette, speaking to me from the Attention, Perception, & EXperience lab at the University of Chicago. While it's apparent from our conversation that we are nowhere close to having computers with artificial wisdom, both Shannon and John were able to elucidate the research paths we might see in the near future, at least in terms of brain states and machine learning for developing creativity, if not wisdom.

As one might expect from a group of research scientists who have worked together for years, the conversation on machine learning, brain states, and artificial wisdom quickly devolved into references to Data, the sentient android from Star Trek, and Marvin, the depressed robot from The Hitchhiker's Guide to the Galaxy. And finally, this:

John: So we've just identified the next frontier: where we can now move from wise AI and creative AI to judgmental AI. [laughter]…And that's going to be my contribution to the world. [chuckles]

Jean: From the University of Chicago Center for Practical Wisdom, this has been a podcast of Conversations on Wisdom.
